From Brains to Algorithms: How Does an Artificial Neuron Really Work?

The human brain may be the most complex structure we know of. During my thesis research, I learned that modern AI, from ChatGPT to image recognition, rests on one tiny building block: the artificial neuron.

The human brain contains approximately 86 billion neurons. Artificial neural networks are loosely modeled on this structure, in a much simpler form.

The Structure of an Artificial Neuron

Let's explore how a neuron is built and what happens inside!

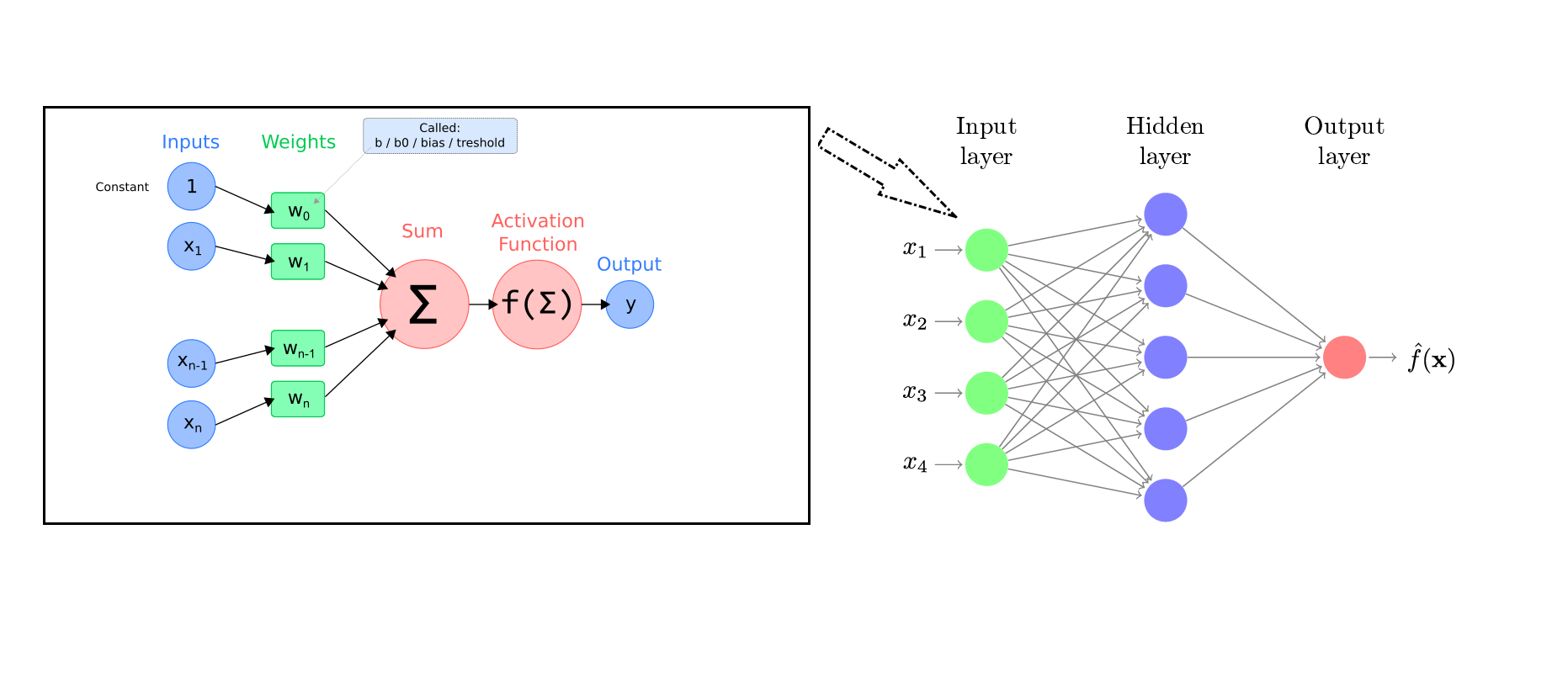

Inputs and Weights

Inputs are the data the neuron "sees", for example a pixel value or the output of another neuron. Each input has an associated weight that indicates how important that information is.

Imagine listening to experts' opinions - some carry more weight because they're more experienced. The neuron multiplies each input by its weight, then sums them up.
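To make the multiply-and-sum step concrete, here is a minimal sketch in plain Python. The function name `weighted_sum` and the example numbers are made up for illustration; they are not taken from any particular library.

```python
def weighted_sum(inputs, weights):
    """Multiply each input by its weight and add the products together."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    return sum(x * w for x, w in zip(inputs, weights))


# Three hypothetical "expert opinions" and how much we trust each expert.
opinions = [1.0, 0.0, 1.0]
trust = [0.5, 0.25, 2.0]

total = weighted_sum(opinions, trust)
print(total)  # 1.0*0.5 + 0.0*0.25 + 1.0*2.0 = 2.5
```

The third expert dominates the result simply because their weight is the largest.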

Here's the magic: when the network "learns," it's actually adjusting these weights over and over until its outputs are as close as it can get to the answers we want.
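As a rough illustration of "adjusting weights over and over," the sketch below trains a single weight with gradient descent, one common way of doing this (the text above does not name a specific method, so treat that choice as an assumption). The function name, learning rate, and toy data are all hypothetical.

```python
def train_single_weight(samples, learning_rate=0.1, epochs=50):
    """Fit y ~ w * x by repeatedly nudging w to reduce the squared error."""
    w = 0.0  # start with no knowledge
    for _ in range(epochs):
        for x, target in samples:
            prediction = w * x
            error = prediction - target
            # The gradient of 0.5 * error**2 with respect to w is error * x,
            # so we step in the opposite direction.
            w -= learning_rate * error * x
    return w


# Toy data generated by the rule y = 2 * x.
samples = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
learned_w = train_single_weight(samples)
print(learned_w)  # ends up very close to 2.0
```

Each pass moves the weight a little closer to the value that explains the data. Real networks do the same thing for millions of weights at once.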

Bias and Activation

Next, we add the bias to the sum. It's like a fine-tuning knob: it shifts the point at which the neuron starts to fire, which helps the model fit data more flexibly.

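Finally comes the activation function, the neuron's "switch." It decides how much the neuron should "fire." Why is this important? Without a non-linear activation, stacking neurons would only ever produce another linear function of the inputs. The activation is what enables the network to recognize complex, non-linear patterns, not just simple relationships.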
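Putting the pieces together, a whole neuron can be sketched in a few lines. This is an illustrative sketch, not a reference implementation: `neuron` and `clamp_at_zero` are hypothetical names, and the activation is passed in as a parameter so any function can be swapped in.

```python
def neuron(inputs, weights, bias, activation):
    """Weighted sum of the inputs, plus the bias, passed through the activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)


def clamp_at_zero(z):
    """A simple example activation: pass positive values, block negative ones."""
    return max(0.0, z)


inputs = [1.0, 2.0]
weights = [0.5, -1.0]

# Weighted sum is 0.5 - 2.0 = -1.5, so without a bias the neuron stays silent.
print(neuron(inputs, weights, bias=0.0, activation=clamp_at_zero))  # 0.0

# A bias of 2.0 shifts the sum to 0.5, and the neuron fires.
print(neuron(inputs, weights, bias=2.0, activation=clamp_at_zero))  # 0.5
```

The same inputs and weights give different outputs depending on the bias, which is the "fine-tuning knob" effect in action.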

Popular Activation Functions

Sigmoid: Squashes any input into a value between 0 and 1, which makes it a natural fit for probabilities ("what's the chance this is a cat?").

ReLU: The modern favorite. Simple: lets positive values through, sets negatives to zero. Despite its simplicity, it's incredibly effective.
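Both functions are short enough to write out by hand. The sketch below uses only Python's standard `math` module. The two-branch form of `sigmoid` is a design choice I've added to avoid overflow for very negative inputs; it computes the same mathematical function as 1 / (1 + e^(-z)).

```python
import math


def sigmoid(z):
    """Map any real number into the range between 0 and 1."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # Equivalent form for negative z that never calls exp() on a large number.
    e = math.exp(z)
    return e / (1.0 + e)


def relu(z):
    """Let positive values through unchanged, set negatives to zero."""
    return max(0.0, z)


print(sigmoid(0.0))  # 0.5, i.e. "no idea either way"
print(relu(-3.0))    # 0.0
print(relu(2.5))     # 2.5
```

Large positive inputs push the sigmoid toward 1 and large negative inputs push it toward 0, while ReLU has no upper limit at all.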

One Neuron Isn't Enough

A single neuron can't do much on its own. The real magic begins when we connect these units - just like in the human brain.

Different tasks require different architectures:

Feedforward Networks: Information flows in only one direction, like an assembly line.

Convolutional Networks (CNN): Champions of image processing. They look for local patterns - edges, textures. When your phone recognizes your face, it's likely using a CNN.

Recurrent Networks (RNN): They "remember" previous inputs by carrying an internal state from one step to the next, which makes them well suited to sequential data such as text or speech.
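The three data flows above can be sketched side by side in plain Python. Everything here is a toy under stated assumptions: the function names are hypothetical, the weights are hand-picked rather than learned, the feedforward layers use ReLU, the recurrent step uses `tanh` (a common choice, not something the text above specifies), and the "convolution" is the sliding multiply-and-sum without padding that deep learning libraries typically compute.

```python
import math


def relu(z):
    return max(0.0, z)


def dense_layer(inputs, weight_rows, biases):
    """One feedforward layer: each row of weight_rows is one neuron's weights."""
    return [
        relu(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weight_rows, biases)
    ]


def feedforward(inputs, layers):
    """Pass values through the layers in order; nothing flows backwards."""
    values = inputs
    for weight_rows, biases in layers:
        values = dense_layer(values, weight_rows, biases)
    return values


def convolve2d(image, kernel):
    """Slide a small kernel over the image and sum the products at each spot."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def run_rnn(sequence, w_input=0.5, w_hidden=0.8):
    """Process a sequence one item at a time, carrying a hidden state along."""
    hidden = 0.0
    states = []
    for x in sequence:
        # The new state depends on the current input AND the previous state.
        hidden = math.tanh(w_input * x + w_hidden * hidden)
        states.append(hidden)
    return states


# Feedforward: two hidden neurons plus one output neuron compute |a - b|.
abs_diff_layers = [
    ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]),  # hidden layer
    ([[1.0, 1.0]], [0.0]),                     # output layer
]
print(feedforward([3.0, 1.0], abs_diff_layers))  # [2.0]

# CNN-style local pattern: a 1x2 kernel that responds to a dark-to-bright edge.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
print(convolve2d(image, [[-1, 1]]))  # [[0, 1, 0], [0, 1, 0], [0, 1, 0]]

# RNN: both sequences end in 0.0, but only the first one "remembers" a 1.0.
print(run_rnn([1.0, 0.0, 0.0]))
print(run_rnn([0.0, 0.0, 0.0]))  # [0.0, 0.0, 0.0]
```

The feedforward example computes something a single linear neuron cannot, the convolution lights up only where the edge is, and the recurrent example ends in a non-zero state purely because of an input it saw two steps earlier.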

Why Does This Matter?

This principle - the artificial neuron and network structure - led us to today's applications. From spam filters to product recommendations to GPT and Claude, many of the systems we use every day rest on this foundation.

When we chat with ChatGPT or Netflix recommends a movie, millions of artificial neurons are working in harmony behind the scenes.